AI Stack

$1,928.00 BCM957608-P2200GQF00 Dual Port QSFP112 200G Ethernet


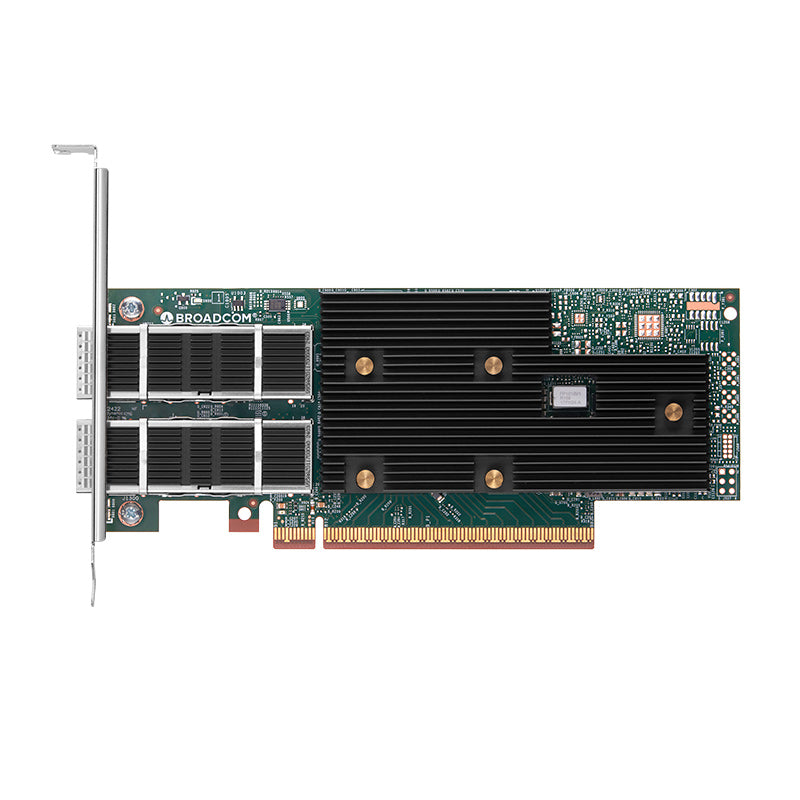

$1,928.00 Broadcom® BCM957608-P2200GQF00 Ethernet Network Interface Card, 200G Dual-Port QSFP112, PCIe 5.0 x16, Tall and Short Bracket

The Broadcom BCM957608-P2200GQF00 is a flexible dual-port 200G Ethernet adapter built for modern data center networking requirements.

Architecture and Performance:

The adapter leverages Broadcom's proven 400G Ethernet controller foundation, splitting it into two 200G ports. This approach provides flexibility for high-density server deployments while maintaining the performance benefits of the larger controller architecture. The hardware acceleration engines are particularly noteworthy as they offload network processing tasks from the CPU, which is crucial in AI/ML workloads where every CPU cycle counts for computation.

RDMA and Congestion Control:

The BCM957608-P2200GQF00 is equipped with a fourth-generation RoCE (RDMA over Converged Ethernet) implementation with hardware-based congestion control. Traditional software-based congestion control can introduce latency and consume CPU resources. Broadcom's hardware approach reduces latency and simplifies large-scale deployments by handling congestion management at the silicon level, which reduces the need for complex software tuning.

TruFlow™ Technology:

The TruFlow feature is designed to enhance virtualization efficiency by offloading flow processing from the host CPU. In environments with high VM density, this translates to better resource utilization and improved overall server performance, making it particularly valuable for cloud service providers.

Security Integration:

The BCM957608-P2200GQF00 includes a silicon-based root of trust (RoT) with secure boot and attestation capabilities, addressing the growing security concerns in data center environments. This hardware-anchored security approach provides a more robust foundation than software-only solutions, which is especially important for sensitive AI/ML workloads and cloud infrastructure.

Deployment:

The BCM957608-P2200GQF00 targets the intersection of high-performance computing, AI/ML acceleration, and cloud infrastructure where network performance can be a critical bottleneck. The 200G per port configuration offers a sweet spot between the bandwidth requirements of modern applications and the practical considerations of cable management and switch port density in large deployments.

The emphasis on "mega-scale data center networks" makes the BCM957608-P2200GQF00 well suited to hyperscale environments where network efficiency directly impacts operational costs and service quality.

Features:

Network Interface:

8 SerDes capable of 100/50G PAM4 and 25G NRZ

QSFP112 support

1x 400GbE

2x 200/100/50/25GbE

400Gb/s total bandwidth

Auto-negotiation with auto-detect

IEEE-1588v2

Host Interface:

16 lanes of PCI Express 5.0

Link rates: 32, 16, 8, 5, 2.5 GT/s

Lane configuration: x16, x8, x4, x2, and x1

MSI-X support
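As a rough check on the host interface figures above, the sketch below estimates raw PCIe bandwidth for a few link configurations and compares it with the 400 Gb/s the two ports can carry together. It assumes 128b/130b line encoding (used by PCIe 3.0 and later) and ignores TLP/DLLP protocol overhead, so usable throughput will be somewhat lower. The function names are illustrative only.

```python
# Per-lane signalling rates in GT/s, from the "Link rates" list above.
PCIE_GTS = {"5.0": 32.0, "4.0": 16.0, "3.0": 8.0}

# PCIe 3.0 and later use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: str, lanes: int) -> float:
    """Approximate one-direction link bandwidth in Gb/s, before protocol overhead."""
    return PCIE_GTS[gen] * lanes * ENCODING_EFFICIENCY


def covers_ports(gen: str, lanes: int, port_gbps: float = 200.0, ports: int = 2) -> bool:
    """True if the raw PCIe link rate is at least the aggregate Ethernet port rate."""
    return pcie_gbps(gen, lanes) >= port_gbps * ports


if __name__ == "__main__":
    for gen, lanes in [("5.0", 16), ("5.0", 8), ("4.0", 16)]:
        verdict = "covers" if covers_ports(gen, lanes) else "does not cover"
        print(f"PCIe {gen} x{lanes}: {pcie_gbps(gen, lanes):.1f} Gb/s, {verdict} 2 x 200G")
```

Under these assumptions only the PCIe 5.0 x16 configuration has headroom for both ports at line rate; a PCIe 4.0 x16 or PCIe 5.0 x8 link is enough for a single 200G port.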

Platform Security:

HW Secure Boot (RoT)

Attestation (SPDM)

OCP Secure Recovery

Secure Wipe and Restore

OCP Silver Security Badge (Cert Pending)

RoCEv2:

Standards-based

DCQCN

Peer Memory Direct

Smart Congestion Control and Advanced Telemetry

Automated Configuration
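DCQCN, listed above, is a published congestion control scheme for RoCEv2 in which the sender cuts its rate when it receives a Congestion Notification Packet (CNP). The sketch below models only that rate-decrease step as described in the public DCQCN literature. It is not Broadcom's hardware implementation, it omits the rate-recovery stages, and the class name and the gain g = 1/256 are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class DcqcnSender:
    """Simplified DCQCN reaction point: rate decrease only (illustrative)."""
    rate_gbps: float           # current sending rate
    target_gbps: float = 0.0   # rate remembered for later recovery (recovery not modelled)
    alpha: float = 1.0         # running estimate of congestion severity
    g: float = 1 / 256         # assumed gain for the alpha update

    def on_cnp(self) -> None:
        """A CNP arrived: remember the current rate, then cut it in proportion to alpha."""
        self.target_gbps = self.rate_gbps
        self.rate_gbps *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self) -> None:
        """No CNP during the alpha timer window: decay the congestion estimate."""
        self.alpha = (1 - self.g) * self.alpha


if __name__ == "__main__":
    sender = DcqcnSender(rate_gbps=200.0)
    sender.on_cnp()
    print(f"rate after first CNP: {sender.rate_gbps:.1f} Gb/s")
```

With the initial alpha of 1, the first CNP halves the rate; as alpha decays during quiet periods, later cuts become gentler.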

Networking/Virtualizations and Accelerations:

Multi-Queue, NetQueue, and VMQ

Single Root I/O Virtualization

VF isolation and protection

VXLAN, GRE, NVGRE, Geneve, and IP-in-IP

Tunnel-aware stateless offloads

Edge Virtual Bridging (EVB)

Stateless TCP offloads: IP/TCP/UDP checksum, LSO, LRO, GRO, TSS, RSS, aRFS, Interrupt coalescing

kTLS hardware offload encryption/decryption support

QUIC hardware offload encryption/decryption support

TruFlow Flow Processing:

Flexible matching key

NAT and NAPT

Tunnel encap/decap

Custom tunnel processing

Connection tracking

Flow aging

Sampling and mirroring

Rate-limiting and metering

Flow-based statistics

Network Traffic Hairpin

Manageability:

Network Controller Sideband Interface (NC-SI)

Management Component Transport Protocol (MCTP)

MCTP over SMBus/I2C

MCTP over PCIe VDM

NC-SI over MCTP

Platform Level Data Model (PLDM): Base, Monitoring/Control & FW update

PLDM over MCTP

I2C support for device control and configuration

Network Boot:

UEFI PXE boot

UEFI L2 iSCSI boot

UEFI support for x86

Design Specification:

Conforms to PCI-SIG CEM

Summary:

The Broadcom BCM957608-P2200GQF00 dual-port 200G Ethernet PCIe NIC represents a comprehensive solution for enterprise data center network infrastructure modernization. This high-performance network interface card combines hardware-accelerated RDMA over Converged Ethernet (RoCE v2) with silicon-anchored security features, making it ideal for GPU-accelerated computing clusters, hyperconverged infrastructure deployments, and distributed AI training workloads.

Key Technology Differentiators

- Hardware-based congestion control algorithms for ultra-low latency RDMA communications

- TruFlow™ stateful flow processing offload reducing CPU utilization in virtualized environments

- Silicon root of trust (RoT) with secure boot attestation for zero-trust network architecture

- PCIe Gen4/Gen5 optimized data path supporting lossless Ethernet with priority flow control

- SR-IOV hardware virtualization enabling multi-tenant cloud infrastructure scaling

Deployment Scenarios

- Machine learning model training clusters requiring high-bandwidth inter-node communication

- Hyperscale cloud service provider infrastructure with multi-gigabit tenant isolation

- High-frequency trading (HFT) networks demanding microsecond-level latency consistency

- Software-defined storage (SDS) backends for distributed file systems and object storage

- Container orchestration platforms (Kubernetes, OpenShift) with network function virtualization

- Edge computing gateways processing real-time analytics and IoT data streams

NIC Advantages

- Hardware-accelerated packet processing pipelines vs. software-based solutions

- Integrated network telemetry and flow visibility for application performance monitoring

- Lossless Ethernet with DCB (Data Center Bridging) eliminating packet drops under congestion

- DPDK and SPDK compatibility for userspace networking and storage acceleration

- InfiniBand-class RDMA performance over standard Ethernet infrastructure

BCM957608-P2200GQF00 Frequently Asked Questions | FAQs

General Product Information

Q: What is the BCM957608-P2200GQF00? A: The BCM957608-P2200GQF00 is a dual-port 200G Ethernet PCIe network interface card (NIC) designed for high-performance data center applications including AI/ML, cloud computing, HPC, and storage environments.

Q: What does the "2 x 200G" configuration mean? A: This NIC provides two independent 200 Gigabit Ethernet ports, delivering a total bandwidth capacity of 400 Gbps across both ports. Each port can operate independently at full 200G speeds.

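Q: What PCIe generation and lane configuration does this adapter use? A: The host interface is PCI Express 5.0 with 16 lanes (see Host Interface above). The link can also train at lower rates (16, 8, 5, and 2.5 GT/s) and narrower widths (x8, x4, x2, x1), but a PCIe 4.0 x16 or PCIe 5.0 x8 link offers roughly 252 Gb/s of raw bandwidth, which is less than the 400 Gb/s the two ports can carry together.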

Technical Specifications

Q: What is RoCE and why is it important? A: RoCE (RDMA over Converged Ethernet) is a network protocol that allows direct memory access between servers over Ethernet networks. The BCM957608 supports 4th generation RoCE with hardware-based congestion control, providing ultra-low latency communication essential for AI/ML workloads and high-performance computing.

Q: What is TruFlow™ technology? A: TruFlow™ is Broadcom's flow processing offload technology that moves network flow management from the CPU to dedicated hardware on the NIC. This increases virtual machine density and frees up CPU resources for application processing.

Q: What hardware acceleration features are included? A: The adapter includes multiple hardware acceleration engines for network processing tasks, congestion control, flow processing, and security functions, all designed to reduce CPU overhead and improve overall system performance.

Compatibility and Requirements

Q: What server platforms are supported? A: The adapter is designed for enterprise servers supporting PCIe 4.0/5.0 interfaces. Specific compatibility should be verified with server vendors, but it's typically compatible with major x86 server platforms from Dell, HPE, Lenovo, and others.

Q: What operating systems are supported? A: Support typically includes major enterprise operating systems such as Linux distributions (RHEL, Ubuntu, SUSE), Windows Server, and VMware vSphere. Specific driver availability should be confirmed with Broadcom.

Q: What cable types and distances are supported? A: The ports are QSFP112 cages (see Network Interface above). Media options at 200G include 200GBASE-SR4 (OM4 multimode fiber up to 100m), 200GBASE-LR4 (single-mode fiber up to 10km), 200GBASE-DR4, and passive/active copper DAC cables, with MPO/MTP fiber connectivity and breakout configurations for 4x50G or 2x100G port splitting. Confirm specific transceivers, including QSFP56 modules, and breakout modes against Broadcom's qualified list.

Performance and Optimization

Q: What is the maximum throughput per port? A: Each port supports up to 200 Gbps line rate throughput with hardware acceleration for various network processing tasks.
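To put the 200 Gbps line rate in packet terms, the sketch below computes the theoretical maximum frame rate for a given Ethernet frame size. It assumes standard Ethernet framing, where each frame also occupies 20 bytes on the wire for the preamble, start-of-frame delimiter, and minimum inter-frame gap. This is a theoretical ceiling, not a measured figure for this adapter, and the function name is illustrative.

```python
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte minimum inter-frame gap.
ETH_OVERHEAD_BYTES = 20


def max_pps(line_rate_gbps: float, frame_bytes: int) -> float:
    """Theoretical maximum frames per second at a given line rate and frame size."""
    bits_on_wire = (frame_bytes + ETH_OVERHEAD_BYTES) * 8
    return line_rate_gbps * 1e9 / bits_on_wire


if __name__ == "__main__":
    for size in (64, 512, 1518):
        print(f"{size:>5}-byte frames at 200G: {max_pps(200, size) / 1e6:.1f} Mpps")
```

Small frames dominate the packet-rate requirement: minimum-size 64-byte frames at 200G work out to roughly 297.6 million packets per second per port.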

Q: How does hardware-based congestion control benefit performance? A: Hardware congestion control provides faster response times to network congestion events compared to software-based solutions, resulting in lower latency and more predictable performance in high-traffic scenarios.

Q: What is the typical latency performance? A: While specific latency figures aren't provided, the hardware-based RoCE implementation and dedicated acceleration engines are designed to deliver sub-microsecond latencies for RDMA operations.

Security Features

Q: What security features are included? A: The adapter includes a silicon-based root of trust (RoT) that provides secure boot capabilities and hardware attestation. This creates a hardware-anchored security foundation that's more robust than software-only security measures.

Q: How does the root of trust work? A: The silicon root of trust provides cryptographic verification of the adapter's firmware and drivers during boot, ensuring the integrity of the network stack and preventing unauthorized modifications.

Deployment and Use Cases

Q: What are the primary use cases for this adapter? A: The BCM957608 targets distributed GPU training clusters, hyperconverged infrastructure (HCI), NVMe-oF storage fabrics, container networking (CNI), edge AI inference appliances, financial trading systems, and telco network function virtualization (NFV) deployments requiring deterministic latency and high packet per second (PPS) rates.

Q: Is this suitable for virtualized environments? A: Yes, the TruFlow™ technology and hardware acceleration features are specifically designed to improve performance in virtualized environments by increasing VM density and reducing CPU overhead.

Q: How does this adapter benefit AI/ML workloads? A: The adapter accelerates distributed training frameworks (TensorFlow, PyTorch, MXNet) through NCCL (NVIDIA Collective Communications Library) optimization, supports GPUDirect RDMA for zero-copy GPU-to-GPU communication, enables model parallelism and data parallelism scaling, and provides deterministic network performance for synchronous SGD (Stochastic Gradient Descent) algorithms.

Installation and Configuration

Q: What tools are available for configuration and monitoring? A: Broadcom typically provides management utilities, driver packages, and integration with standard network management tools. Specific tools should be confirmed with current Broadcom documentation.

Q: Is SR-IOV supported for virtualization? A: Yes. Single Root I/O Virtualization (SR-IOV) is listed among the adapter's virtualization features, along with VF isolation and protection, for efficient resource sharing in virtualized environments.

Q: Is DPDK support included? A: Yes, the adapter includes optimized DPDK poll mode drivers (PMD) for userspace packet processing, supports DPDK testpmd benchmarking, integrates with OVS-DPDK for software-defined networking, and provides SPDK NVMe-oF target acceleration for storage disaggregation architectures.

Q: What virtualization features are supported? A: The adapter supports SR-IOV with up to 128 virtual functions (VF), VXLAN/NVGRE tunnel offloads, VMware NSX-T integration, OpenStack Neutron ML2 drivers, and hardware-accelerated container networking for Docker and Kubernetes CNI plugins.
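On Linux, the kernel exposes a PCI device's SR-IOV capability through the `sriov_totalvfs` and `sriov_numvfs` sysfs attributes. The following is a minimal sketch for checking what a given port reports, assuming a Linux host. The interface name `eth0` is a placeholder and the helper names are hypothetical, not part of any Broadcom tool.

```python
from pathlib import Path
from typing import Dict, Optional


def read_int(path: Path) -> Optional[int]:
    """Read an integer sysfs attribute; return None if it is missing or unreadable."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def sriov_info(iface: str, sysfs_root: str = "/sys/class/net") -> Dict[str, Optional[int]]:
    """Report the maximum and currently enabled VF counts for a network interface."""
    device = Path(sysfs_root) / iface / "device"
    return {
        "total_vfs": read_int(device / "sriov_totalvfs"),  # maximum VFs the device supports
        "num_vfs": read_int(device / "sriov_numvfs"),      # VFs currently enabled
    }


if __name__ == "__main__":
    # "eth0" is a placeholder; substitute the adapter's actual interface name.
    print(sriov_info("eth0"))
```

A `total_vfs` value of `None` means the attribute was not found, for example because the interface does not exist or SR-IOV is disabled in firmware or the platform BIOS.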

Troubleshooting and Support

Q: What happens if one port fails? A: The dual-port design provides redundancy: if one port fails, the other can continue operating independently, though total bandwidth is reduced to 200G.

Q: How is the adapter cooled? A: The adapter includes integrated cooling solutions designed for standard server environments. Proper server airflow and cooling should be maintained for optimal performance.

Q: Where can I get technical support? A: Technical support is available through Broadcom's support channels. In addition, our engineers are available to assist with setup and configuration.

Purchasing and Availability

Q: What's included in the package? A: Typically includes the PCIe adapter card, tall and short brackets (per the product title), documentation, and driver media. Cables and transceivers are usually sold separately.

Q: Is this adapter available for evaluation? A: Please connect with us using the contact menu option at the top of this page regarding evaluations or demos.

Q: What is the warranty coverage? A: Standard warranty terms apply as per Broadcom's warranty policy. Specific terms should be confirmed at time of purchase.

More Deployment Scenarios for BCM957608-P2200GQF00:

GPU to GPU server networking (scale-out and front-end)

Artificial Intelligence (AI) and Machine Learning (ML)

High-performance computing (HPC), Cloud, high density computing & data center servers

Network Function Virtualization

NVMe storage disaggregation

Storage servers

Contact us with questions or request a formal quote for procurement.
